In 2018, I wrote an article about Clickhouse; that piece is still pretty popular across the internet and has even been translated a few times. More than two years have passed since, and the pace of Clickhouse development is not slowing down: 800 merged PRs during the last month alone! If that didn't blow your mind, check out the full changelog, for example for 2020: https://clickhouse.tech/docs/en/whats-new/changelog/2020/ Just the description of the new features for each year may take an hour to go through.

Mar 02, 2021

For the sake of an honest comparison: the ElasticSearch repo had a jaw-dropping 1076 PRs merged in the same month, and in terms of features its pace is very impressive as well!

We are using Clickhouse for log storage and analytics in the ApiRoad.net project (an API marketplace where developers sell their APIs, still in active development), and we are happy with the results so far. As an API developer myself, I know how important observability and analysis of the HTTP request/response cycle are for maintaining quality of service and quickly detecting bugs; this is especially true for a pure API service. (If you are an API author and want to use the ApiRoad analytics & billing platform to sell API subscriptions, drop me a message at contact@apiroad.net with your API description – I will be happy to chat!)

We are also using the ELK stack (ElasticSearch, Logstash, Filebeat, Kibana) on other projects for very similar purposes: collecting HTTP and mail logs for later analysis and search via Kibana.

And, of course, we use MySQL. Everywhere!

This post is about the major reasons why we chose Clickhouse and not ElasticSearch (or MySQL) as a storage solution for ApiRoad.net essential data - request logs (Important note: we still use MySQL there, for OLTP purposes).

1. SQL support, JSON and Arrays as first class citizens.

SQL is a perfect language for analytics. I love the SQL query language, and an SQL schema is a perfect example of boring tech that I recommend using as the source of truth for all the data in 99% of projects: if the project code is not perfect, you can improve it relatively easily as long as your database state is strongly structured. If your database state is a huge JSON blob (NoSQL) and no one can fully grasp the structure of this data, refactoring usually gets much more problematic.

I saw this happening, especially in older projects with MongoDB, where every new analytics report and every new refactoring involving data migration is a big pain. Starting such projects is fun – as you don't need to spend your time carefully designing the complete project schema, just 'see how it goes' – but maintaining them is not fun!

But it is important to note that this rule of thumb, 'use a strict schema', is not that critical for log storage use cases. That is one reason why ElasticSearch is so successful: it has many strengths, and a flexible schema is one of them.

Back to JSON: traditional RDBMS are still catching up with NoSQL DBMS in terms of JSON querying and syntax, and we should admit JSON is a very convenient format for dynamic structures (like log storage).

Clickhouse is a modern engine that was designed and built when JSON was already a thing (unlike MySQL and Postgres), and it does not have to carry the baggage of backward compatibility and the strict SQL standards of these super-popular RDBMS, so the Clickhouse team can move fast in terms of features and improvements, and they indeed do. The developers of Clickhouse had more opportunities to strike a balance between strict relational schemas and JSON flexibility, and I think they did a good job here. Clickhouse tries to compete with Google BigQuery and other big players in the analytics field, so it got many improvements over 'standard' SQL, which makes its syntax a killer combo and, in a lot of cases, much better than what you get in a traditional RDBMS for analytics and various calculation purposes.

Some basic examples:

In MySQL, you can extract JSON fields, but complex JSON processing, like joining relational data on JSON data, became available only recently, in version 8, with the JSON_TABLE function. In PostgreSQL, the situation is even worse: no direct JSON_TABLE alternative until PostgreSQL 12!

Compare it to Clickhouse JSON and related arrays feature set - it is just miles ahead. Links:

Array functions such as arrayJoin, arrayMap, and range are useful in a lot of cases where you would use generate_series() in PostgreSQL. A concrete example from ApiRoad: we need to plot the number of requests on a chart.js timeline. If you do a regular SELECT ... GROUP BY day, you will get gaps for days that did not have any requests. And we don't need gaps, we need zeros there, right? This is exactly where the generate_series() function is useful in PostgreSQL. In MySQL, the recommendation is to create a stub table with a calendar and join on it... not too elegant, huh?

Here is how to do it in ElasticSearch: https://www.elastic.co/guide/en/elasticsearch/reference/current/search-aggregations-bucket-datehistogram-aggregation.html#_missing_value_2

Regarding the query language: I am still not comfortable with the verbosity and approach of the ElasticSearch Lucene syntax, the HTTP API, and all the JSON structures that you need to write to retrieve some data. SQL is my preferred choice.

Here is the Clickhouse solution for dates gap filling:
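A minimal sketch of such a query, assuming a hypothetical `logs` table with a `started_at` DateTime column and a fixed date range (the names and dates are illustrative, not the actual ApiRoad schema):

```sql
-- Assumed schema (hypothetical): logs(started_at DateTime, ...)
SELECT
    d.day      AS day,
    r.requests AS requests   -- 0 for days with no rows, given the default join_use_nulls = 0
FROM
(
    -- Calendar: range(n) yields [0, 1, ..., n-1]; the lambda turns each offset into a date,
    -- and arrayJoin unfolds the resulting array into one row per day
    SELECT arrayJoin(
        arrayMap(
            x -> toDate('2021-02-01') + x,
            range(toUInt32(dateDiff('day', toDate('2021-02-01'), toDate('2021-03-01'))))
        )
    ) AS day
) AS d
LEFT JOIN
(
    -- Actual request counts per day
    SELECT
        toDate(started_at) AS day,
        count()            AS requests
    FROM logs
    WHERE started_at >= toDateTime('2021-02-01 00:00:00')
      AND started_at <  toDateTime('2021-03-01 00:00:00')
    GROUP BY day
) AS r ON d.day = r.day
ORDER BY d.day;
```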

Here, we generate a virtual table via a lambda function and a loop, and then left join it with the results from the logs table grouped by day.

I think the arrayJoin + arrayMap + range functions allow more flexibility than generate_series() from Postgres or the ElasticSearch approach. There is also the WITH FILL keyword available for a more concise syntax.
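For comparison, a sketch of the same gap filling with WITH FILL, again assuming the hypothetical `logs(started_at DateTime)` table. As far as I know, filled rows get default values for the other columns, so `requests` should come out as 0 on empty days:

```sql
-- Assumed schema (hypothetical): logs(started_at DateTime, ...)
SELECT
    toDate(started_at) AS day,
    count()            AS requests
FROM logs
WHERE started_at >= toDateTime('2021-02-01 00:00:00')
  AND started_at <  toDateTime('2021-03-01 00:00:00')
GROUP BY day
ORDER BY day WITH FILL
    FROM toDate('2021-02-01')
    TO   toDate('2021-03-01')   -- upper bound, not included in the result
    STEP 1;                     -- one row per day
```

This version is shorter, while the arrayJoin variant leaves more room for custom calendars (for example, skipping weekends inside the lambda).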

2. Flexible schema - but strict when you need it

For log storage tasks, the exact data schema often evolves during the project lifetime, and ElasticSearch allows you to put a huge JSON blob into an index and figure out the field types and indexing later. Clickhouse allows the same approach. You can put data into a JSON field and filter it relatively quickly, though it won't be quick at terabyte scale. Then, when you see that you often need fast query execution on a specific data field, you add materialized columns to your logs table, and these columns extract values from the existing JSON on the fly. This allows much faster queries on terabytes of data.
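A minimal sketch of that workflow, assuming a hypothetical `logs` table that keeps the raw JSON in a `payload` String column (table, column, and key names are made up for illustration):

```sql
-- Step 1: start flexible, store the whole JSON document as a string
CREATE TABLE logs
(
    started_at DateTime,
    payload    String      -- raw JSON for the request/response
)
ENGINE = MergeTree
ORDER BY started_at;

-- Ad-hoc filtering directly on JSON: flexible, but the JSON is parsed for every row
SELECT count()
FROM logs
WHERE JSONExtractUInt(payload, 'status') = 404;

-- Step 2: once a field becomes "hot", promote it to a materialized column
ALTER TABLE logs
    ADD COLUMN status UInt16 MATERIALIZED JSONExtractUInt(payload, 'status');

-- The same filter now reads a plain numeric column
SELECT count()
FROM logs
WHERE status = 404;
```

The INSERT side does not change: writers keep sending only `started_at` and `payload`, and the extra column is derived from the JSON.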

I recommend this video from Altinity on the topic of JSON vs Tabular schema for log data storage:

3. Storage and Query Efficiency

Clickhouse is very fast at SELECTs; this was discussed in the previous article.

Interestingly, there is a piece of evidence that Clickhouse can be 5-6 times more efficient in storage compared to ElasticSearch, while also being literally an order of magnitude faster in terms of queries. Another one (in Russian)

There are no direct benchmarks, or at least I could not find any; I believe this is because Clickhouse and ElasticSearch are very different in terms of query syntax, cache implementations, and their overall nature.

If we talk about MySQL, any imperfect query or missing index on a table with a mere 100 million rows of log data can make your server crawl and swap; MySQL is not really suited for large-scale log queries. But in terms of storage, compressed InnoDB tables are surprisingly not that bad. Of course, compression is much worse compared to Clickhouse (sorry, no URLs to benchmarks to support the claim this time) due to InnoDB's row-based nature, but it still often manages to reduce cost significantly without a big performance hit. We use compressed InnoDB tables in some cases for small-scale log purposes.

4. Statistics functions

Getting median and .99 percentile latency of 404 queries is easy in Clickhouse:
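A sketch of such a query, assuming the hypothetical `logs` table has a numeric `status` column and a `duration` column in milliseconds (both names are illustrative):

```sql
-- Assumed columns (hypothetical): status UInt16, duration UInt32 (milliseconds)
SELECT
    count()                        AS cnt,
    quantileTiming(0.5)(duration)  AS duration_median,
    quantileTiming(0.99)(duration) AS duration_99th
FROM logs
WHERE status = 404;
```

The first pair of parentheses carries the parameter (the quantile level), the second one carries the column being aggregated.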

Notice the usage of the quantileTiming function and how currying is elegantly used here. Clickhouse has a generic quantile function, too! But quantileTiming is optimized for working with sequences that describe distributions like web page loading times or backend response times.

There is more than that. Want a weighted arithmetic mean? Want to calculate a linear regression? This is easy, just use a specialized function.
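As an illustration, here is a sketch using `avgWeighted` and `simpleLinearRegression`, assuming hypothetical `duration` and `response_bytes` columns in the `logs` table:

```sql
-- Assumed columns (hypothetical): duration UInt32 (ms), response_bytes UInt64
SELECT
    -- Mean duration, where larger responses weigh more
    avgWeighted(duration, response_bytes)            AS weighted_avg_duration,
    -- Fits duration = k * response_bytes + b and returns the tuple (k, b)
    simpleLinearRegression(response_bytes, duration) AS duration_vs_size
FROM logs;
```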

Here is a full list of statistics functions of Clickhouse:

Most of these are problematic to get in MySQL.

ElasticSearch is much better at this than MySQL: it has both quantiles and weighted medians, but it still does not have linear regression.

5. MySQL and Clickhouse tight integration

MySQL and Clickhouse have integrations on multiple levels, which make it easy to use them together with a minimum of data duplication:

- MySQL database engine - exposes a whole MySQL database dynamically, without relying on the binlog

- MySQL table function to connect to MySQL table in specific SELECT query

- MySQL table engine to describe specific table statically in CREATE TABLE statement
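The three options above can be sketched as follows. The host, database, table, and credentials (`mysql-host:3306`, `shop`, `users`, `reader`, `secret`) are placeholders, and the column list is an assumption about what the MySQL table looks like:

```sql
-- 1. Database engine: expose a whole remote MySQL database under a Clickhouse name
CREATE DATABASE mysql_shop
ENGINE = MySQL('mysql-host:3306', 'shop', 'reader', 'secret');

SELECT count() FROM mysql_shop.users;

-- 2. Table function: ad-hoc access to one MySQL table inside a single query
SELECT count()
FROM mysql('mysql-host:3306', 'shop', 'users', 'reader', 'secret');

-- 3. Table engine: a static definition that proxies one MySQL table
CREATE TABLE mysql_users
(
    id    UInt64,
    email String
)
ENGINE = MySQL('mysql-host:3306', 'shop', 'users', 'reader', 'secret');
```

In all three cases the data stays in MySQL and is fetched at query time, which is why JOIN performance is worth benchmarking on your own data.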

I can't say for sure how fast and stable the dynamic database engines and table engines are on JOINs; this definitely requires benchmarks. But the concept is very appealing: you have a full, up-to-date clone of your MySQL tables in your Clickhouse database, and you don't have to deal with cache invalidation and reindexing.

Regarding using MySQL with Elasticsearch, my limited experience says that these two technologies are just too different; my impression is that they speak foreign languages and do not play 'together'. So what I usually did was just JSONify all the data that I needed to index and send it to ElasticSearch. Then, after some migration or any other UPDATE/REPLACE happens on the MySQL data, I try to figure out the re-indexing part on the Elasticsearch side. Here is an article on the Logstash-powered approach to syncing MySQL and ElasticSearch. I should say I don't really enjoy Logstash because of its mediocre performance and RAM requirements, and because it is another moving part that can break. This syncing and re-indexing task is often a significant reason for us not to use Elasticsearch in simple projects with MySQL.

6. New Features

Want to attach an S3-stored CSV and treat it as a table in Clickhouse? Easy.
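A sketch with the `s3` table function, assuming a publicly readable file; the bucket URL and the column structure below are placeholders, and a private bucket would additionally need access keys passed as arguments:

```sql
-- Placeholder URL and structure; adjust both to the actual file
SELECT
    status,
    count() AS requests
FROM s3(
    'https://my-bucket.s3.amazonaws.com/exports/requests.csv',
    'CSV',
    'started_at DateTime, status UInt16, duration UInt32'
)
GROUP BY status
ORDER BY requests DESC;
```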

Want to update or delete log rows to be compliant with GDPR? Now, this is easy!

There was no clean way to delete or update data in Clickhouse in 2018 when my first article was written, and it was a real downside. Now, it's not an issue anymore. Clickhouse leverages custom SQL syntax to delete rows:
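For example, assuming the hypothetical `logs` table has `user_id` and `ip` columns (illustrative names, and `ip` is assumed not to be part of the table's key):

```sql
-- Remove every log row that belongs to one user
ALTER TABLE logs DELETE WHERE user_id = 12345;

-- Or keep the rows but wipe a personal field
ALTER TABLE logs UPDATE ip = '' WHERE user_id = 12345;
```

Both statements are mutations: by default they are queued and applied in the background by rewriting the affected data parts, so the change is not necessarily visible right after the statement returns.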

The syntax is deliberately explicit, because deleting is still a pretty expensive operation for Clickhouse (and other columnar databases), and you should not do it every second in production.

7. Cons

There are cons for Clickhouse compared to ElasticSearch. First of all, if you build internal analytics for log storage, you want the best GUI tool out there. Kibana is good nowadays for this purpose when you compare it to Grafana (at least, this point of view is very popular on the Internet; the Grafana UI is not that slick sometimes). And you have to stick to Grafana or Redash if you use Clickhouse. (Metabase, which we adore, also got Clickhouse support!)

But in our case, in the ApiRoad.net project, we are building customer-facing analytics, so we have to build the analytics GUI from scratch anyway (we are using a wonderful stack of Laravel, Inertia.js, Vue.js, and Chart.js to implement the customer portal, by the way).

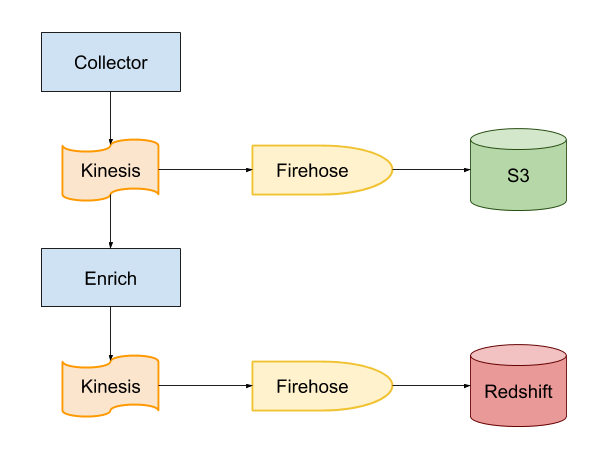

Another issue, related to the ecosystem: the selection of tools to consume and process data and send it to Clickhouse is somewhat limited. For Elasticsearch, there are Logstash and Filebeat, tools native to the Elastic ecosystem and designed to work well together. Luckily, Logstash can also be used to put data into Clickhouse, which mitigates the issue. In ApiRoad, we are using our own custom-built Node.js log shipper, which aggregates logs and then sends them to Clickhouse in a batch (because Clickhouse likes big batches and does not like small INSERTs).
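What the shipper ends up sending can be pictured as one multi-row INSERT instead of one statement per request. A sketch, assuming the hypothetical `logs(started_at DateTime, payload String)` table (a real batch would carry thousands of rows):

```sql
-- One INSERT, many rows: each INSERT into a MergeTree table creates a new data part,
-- so fewer and bigger inserts mean less background merge work
INSERT INTO logs (started_at, payload) VALUES
    ('2021-03-01 10:00:00', '{"status":200,"path":"/v1/users","duration":35}'),
    ('2021-03-01 10:00:01', '{"status":404,"path":"/v1/orders","duration":12}'),
    ('2021-03-01 10:00:01', '{"status":200,"path":"/v1/users","duration":41}');
```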

What I also don't like in Clickhouse is the weird naming of some functions, which exist because Clickhouse was created for Yandex.Metrika (a Google Analytics competitor); e.g. visitParamHas() is a function to check if a key exists in JSON. Generic purpose, bad non-generic name. I should mention that there is a bunch of fresh JSON functions with good names, e.g. JSONHas(), with one interesting detail: they use a different JSON parsing engine, more standards-compliant but a bit slower, as far as I understand.
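A small side-by-side sketch of the two naming styles on a literal JSON string (the sample document is made up; it is written without spaces outside string literals, because the older visitParam* family is documented as expecting that):

```sql
SELECT
    -- Legacy, Metrika-era name
    visitParamHas('{"status":404,"path":"/v1/users"}', 'status') AS legacy_check,
    -- Newer, generically named equivalent backed by a full JSON parser
    JSONHas('{"status":404,"path":"/v1/users"}', 'status')       AS json_check;
```

Both calls are expected to report that the key exists; they differ in how strictly the input is parsed.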

Conclusion

ElasticSearch is a very powerful solution, but I think its strongest side is still huge setups with 10+ nodes, used for large-scale full-text search and facets, complex indexing, and score calculation – this is where ElasticSearch shines. When we talk about time-series and log storage, my feeling is that there are better solutions, and Clickhouse is one of them. The ElasticSearch API is enormous, and in a lot of cases it is hard to remember how to do one exact thing without copy-pasting the exact HTTP request from the documentation; it just feels 'enterprisey' and 'Java-flavored'. Both Clickhouse and ElasticSearch are memory-hungry apps, but the RAM requirement for a minimal Clickhouse production installation is 4GB, and for ElasticSearch it is around 16GB. I also think the Elastic team's focus is getting pretty wide and blurred with all the new amazing machine-learning features they deploy. My humble opinion is that, while these features sound very modern and trendy, this enormous feature set is just impossible to support and improve, no matter how many devs and how much money you have, so ElasticSearch more and more falls into the 'Jack of all trades, master of none' category for me. Maybe I am wrong.

Clickhouse just feels different. Setup is easy. SQL is easy. The console client is wonderful. Everything just feels so light and makes sense, even for smaller setups, but rich features, replicas, and shards for terabytes of data are there when you need them.

Good external links with further info on Clickhouse:

UPD: this post hit #1 on Hacker News; useful comments there, as usual!

Best comments:

ClickHouse is incredible. It has also replaced a large, expensive and slow Elasticsearch cluster at Contentsquare. We are actually starting an internal team to improve it and upstream patches, email me if interested!

I'm happy that more people are 'discovering' ClickHouse. ClickHouse is an outstanding product, with great capabilities that serve a wide array of big data use cases. It's simple to deploy, simple to operate, simple to ingest large amounts of data, simple to scale, and simple to query. We've been using ClickHouse to handle 100's of TB of data for workloads that require ranking on multi-dimensional timeseries aggregations, and we can resolve most complex queries in less than 500ms under load.

Also from HN:
